Update #9 - MAESTRO: An Agentic AI Threat Modelling Framework

MAESTRO (Multi-Agent Environment, Security, Threat, Risk, and Outcome) is a threat modelling framework designed specifically for the unique challenges of Agentic AI. Let's take a look!

Firstly, I want to start by saying that I’m writing this on a day off (I’m headed to a wedding later on!) when I had fully planned to skip this week’s update due to my current workload. However, I was so inspired by checking in after last week’s post and seeing that I’d made it to the milestone of 50 subscribers (!) on this newsletter that I felt compelled to look into something that popped up on my newsfeed a few days ago.

In recent years I’ve done a lot of threat modelling. I’ve used the more formal frameworks, like STRIDE, for application and system-level threat modelling, but the vast majority of that work used my own bespoke approach for gauging cyber threats at the organisation level, as part of the now very popular ‘threat-led pentesting’. I’ve come to see the value of threat modelling, and of seeing security from a holistic view rather than at the micro level, which is where most of my technical career has had me focus.

As such, threat modelling AI (specifically agentic AI, for all its novel challenges) is something that I’ve known has been coming round the corner for me for some time now as I delve deeper and deeper into AI security. You can imagine, then, my interest piquing when I saw a LinkedIn post the other day about a novel threat modelling framework designed specifically for agentic AI!

As far as I can tell, this is the original source for this framework, and it was created by Ken Huang, who is a CEO, Chief AI Officer and a prolific author on topics like AI and Web3. He starts off by surveying the current landscape of threat modelling frameworks and how well they fare when applied to AI. STRIDE, PASTA, OCTAVE and many others are reviewed in depth for their strengths, weaknesses, and where they fall short in capturing AI’s complexities:

“STRIDE lacks the necessary scope to address threats unique to AI, such as adversarial machine learning, data poisoning, and struggles to model the dynamic, autonomous behaviors of AI agents.”

He summarises this section by saying:

“Existing frameworks, while useful in many areas, leave significant gaps for Agentic AI. They don't adequately address the unique challenges arising from the autonomy, learning, and interactive nature of these systems.”

He also calls out the unique challenge of threat modelling systems which are autonomous and, at times, unpredictable. So, to fill many of the gaps that he identified, he proposes MAESTRO, or the Multi-Agent Environment, Security, Threat, Risk, and Outcome framework.

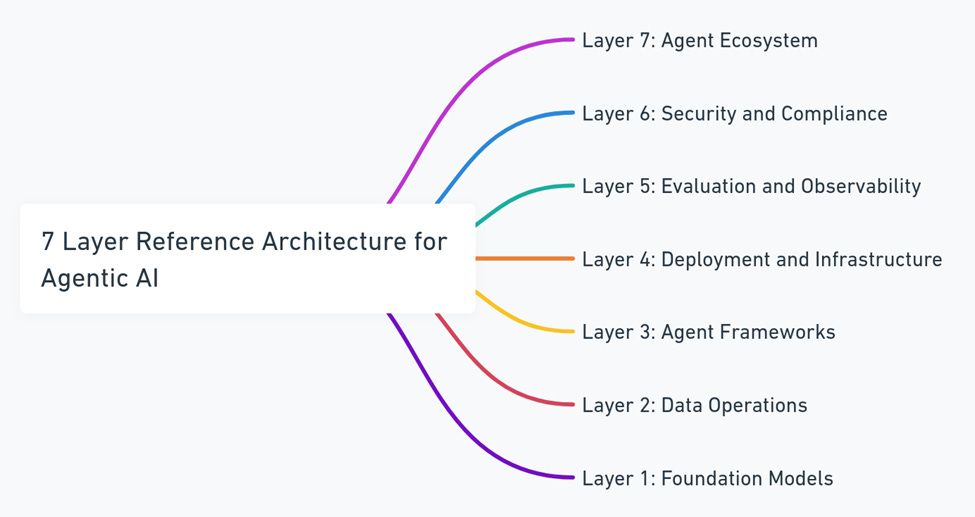

MAESTRO looks at agentic AI in 7 layers:

More detail on this 7-layer architecture can be found here. Seeing AI viewed in this way was oddly satisfying for me. I’ve long known that the only true approach to securing AI is by applying defence-in-depth like never before. Securing LLMs alone is challenging enough, but we're building AI systems and multi-agent solutions at a rate that far exceeds our ability to adequately secure them. My strong conviction is that, with their increasing complexity, each layer of the system’s architecture must be understood, its risks mapped out, adversarial testing performed on it, and defensive controls applied. As such, seeing someone lay out the highest-level abstraction of how agentic AI systems are architected got my mind racing about the real-world applications of this. Perhaps in the future every layer in this model will be its own security domain, with companies specialising in each, like we see today with domains like privileged identity management, data loss prevention, etc.
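For reference, the seven layers can be written down as a simple enumeration. This is only a sketch: the names of Layers 1, 3, 4 and 7 match the headings used further down in this post, while the names for Layers 2, 5 and 6 are my understanding of Ken's write-up and should be checked against the original. The `MaestroLayer` and `describe` names are my own.

```python
from enum import IntEnum


class MaestroLayer(IntEnum):
    """The seven MAESTRO layers, numbered from the bottom of the stack up."""
    FOUNDATION_MODELS = 1
    DATA_OPERATIONS = 2                 # name assumed, check the original write-up
    AGENT_FRAMEWORKS = 3
    DEPLOYMENT_AND_INFRASTRUCTURE = 4
    EVALUATION_AND_OBSERVABILITY = 5    # name assumed, check the original write-up
    SECURITY_AND_COMPLIANCE = 6         # name assumed, check the original write-up
    AGENT_ECOSYSTEM = 7


def describe(layer: MaestroLayer) -> str:
    """Render a layer as a heading such as 'Layer 7 - Agent Ecosystem'."""
    title = layer.name.replace("_", " ").title().replace(" And ", " and ")
    return f"Layer {layer.value} - {title}"


# Print the stack from the top layer down.
for layer in sorted(MaestroLayer, reverse=True):
    print(describe(layer))
```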

This is where Ken leaves the theory behind and uses the model to do some threat modelling layer-by-layer. I’ll summarise a few to give you an understanding of how this could be applied in the real world.

Layer 7 - Agent Ecosystem

This is where our systems and end-users interact with the AI solution - think customer service chatbots all the way up to agentic ‘SOC analyst’ solutions and more. What are some examples of the risks at this layer?

Malicious insiders compromising the agents and repurposing them for nefarious motives.

Integrations, such as the APIs that our ‘SOC analyst’ uses, being vulnerable or over-permissioned, resulting in the solution having too many permissions, leaking sensitive data or causing wider security issues.

Disgruntled employees manipulating AI agent pricing models to cause financial loss for their organisation.
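To make the 'too many permissions' risk a little more concrete, here is a minimal sketch of a least-privilege check: compare what an agent's integration has been granted against what it actually needs. The scope names and the `excess_permissions` helper are hypothetical and are not taken from Ken's post or from any real product.

```python
def excess_permissions(granted: set[str], required: set[str]) -> set[str]:
    """Return the permissions an agent holds but does not need."""
    return granted - required


# Hypothetical scopes for an agentic 'SOC analyst' integration.
granted = {"alerts:read", "tickets:write", "users:delete"}
required = {"alerts:read", "tickets:write"}

for scope in sorted(excess_permissions(granted, required)):
    print(f"over-privileged: {scope}")
```

In practice the 'required' set is the hard part, as it has to come from actually understanding what the agent does day to day.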

Layer 4 - Deployment and Infrastructure

This is the layer dedicated to where our agents are run from, such as on-premises or in the cloud. Some example threats include:

Orchestration attacks, in which the containers and clusters our agents are running on become compromised. Attackers may then have total control over the AI environment.

Lateral movement from other, perhaps less secure, areas of the organisation into this AI environment. If you were successful in phishing an AI engineer via email, is that game over for your AI solution’s integrity?

Denial of service, especially for AI solutions which are driving critical business functions. Could an attacker leverage DoS techniques to consume all of the AI solution’s resources?
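One common way to limit resource exhaustion is to put a budget on how fast any one caller can drive the agent. The sketch below is a basic token bucket; it is my own illustration rather than anything prescribed by MAESTRO, and the `TokenBucket` class and its parameters are hypothetical.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock          # injectable so the bucket can be tested
        self.tokens = capacity      # start with a full bucket
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Top the bucket up for the time that has passed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per caller: a burst of 5 requests, then 1 request per second.
bucket = TokenBucket(capacity=5, refill_per_second=1)
results = [bucket.allow() for _ in range(7)]
print(results.count(True), "allowed,", results.count(False), "rejected")
```

For an LLM-backed agent, `cost` could reflect something like estimated token usage rather than a flat one-per-request, but that is a design choice for the system in question.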

Layer 3 - Agent Frameworks

What about the frameworks which underpin our AI solutions? The toolkits, open-source libraries, commercial products and much more. Think:

Abuse of GitHub Actions runners leading to malicious code being pushed to popular open-source frameworks like LangChain, which underpin lots of AI solutions globally.

Supply chain attacks compromising the organisations which sit at the top of agentic solution supply chains and dependencies.
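A small but useful habit against this kind of tampering is pinning dependencies to a known hash and refusing anything that doesn't match. The snippet below sketches the idea using only Python's standard library; the `matches_pin` helper and the example bytes are hypothetical, and real projects would normally lean on their package manager's hash-checking support instead of rolling their own.

```python
import hashlib
import hmac


def matches_pin(artifact: bytes, pinned_sha256: str) -> bool:
    """Return True if the artifact's SHA-256 digest equals the pinned hex digest."""
    actual = hashlib.sha256(artifact).hexdigest()
    return hmac.compare_digest(actual, pinned_sha256.lower())


# Pretend these bytes are a downloaded framework release (illustrative only).
trusted_release = b"agent-framework release contents"
pinned = hashlib.sha256(trusted_release).hexdigest()   # recorded when the release was vetted

tampered_release = trusted_release + b" plus something malicious"

print(matches_pin(trusted_release, pinned))    # the vetted bytes match the pin
print(matches_pin(tampered_release, pinned))   # any change to the bytes breaks the match
```

Note that a pin only proves the artifact hasn't changed since it was vetted; it says nothing about whether the vetted version was safe to begin with.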

Layer 1 - Foundation Models

These are the core AI models upon which our agents are built. What if:

Backdoors or security flaws are discovered in the core AI models and inherited by every organisation using them?

Data poisoning (intentional or otherwise) occurs during the training phase, potentially leading to core models having skewed or biased outputs?

Hopefully this gives you some idea as to just how complex trying to create entirely secure AI solutions is going to be. Truly, I feel this is going to get even more complicated down the line as we leave security in the dust for competitive advantage, stakeholder pressure, an AI arms race, or whatever else is in store for us.

So, what are we to do about it? Well, personally I see my career path from here heading irrevocably toward agentic AI security and research. However, some perhaps more tangible guidance than that vague statement is laid out by Ken towards the end of the blog, and it aligns with my own view as described earlier.

If we are to truly secure these systems we are left with no option but to understand how these layers are made up and integrate with one another, and to define and implement controls tailored to the threats specific to each layer. Some of these controls span several layers (think security monitoring) whilst others target a single, narrow threat.
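As a rough illustration of what mapping controls to layers might look like, the sketch below records which layers each control covers and then reports the layers left with nothing at all. The `Control` class, the control names and the layer assignments are all hypothetical examples of mine, not part of the MAESTRO framework itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    name: str
    layers: frozenset[int]   # MAESTRO layer numbers (1-7) the control covers


def layers_without_controls(controls: list[Control], all_layers=range(1, 8)) -> list[int]:
    """Return the layer numbers that no control covers."""
    covered = set().union(*(c.layers for c in controls))
    return [layer for layer in all_layers if layer not in covered]


def cross_layer(controls: list[Control]) -> list[str]:
    """Return the names of controls that span more than one layer."""
    return [c.name for c in controls if len(c.layers) > 1]


# Illustrative register; the layer assignments are assumptions.
controls = [
    Control("security monitoring", frozenset({3, 4, 7})),
    Control("dependency hash pinning", frozenset({3})),
    Control("least-privilege API scopes", frozenset({7})),
]

print("cross-layer controls:", cross_layer(controls))
print("layers with no controls:", layers_without_controls(controls))
```

Even a crude register like this makes the gaps visible, which is most of the value of doing the exercise layer by layer.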

We will need to challenge our assumptions using tried and tested security practices like red teaming, and try (perhaps in vain) to build security into novel solutions from the ground up. Sadly, having spent my career largely applying security principles in retrospect to a system’s development and distribution, I fear this particular point will be neglected yet again. I can only imagine this will be exacerbated by the frantic adoption of AI and its minute-by-minute evolution. I fear, in this case, we may be rolling the dice with a technology that is uniquely dangerous if not designed with the utmost security in mind.

The very selfish silver lining for AI security enthusiasts like myself is that this is an area which I can only see becoming more and more important, so I should be kept busy in the years to come! On that slightly less-cheery-than-planned note I will end this week’s update. Despite it sounding all doom and gloom, it is steps like this agentic AI-specific threat modelling framework that will continue to take us closer to the ‘secure’ AI solution design that we are aiming for. With that, a big thanks to Ken for his research in this area and for leading the charge! Catch you next week!